The bots are here…and they can code! AI tools like ChatGPT from OpenAI have burst onto the market and have quickly shown their utility when it comes to application development. Just like object-oriented programming, WYSIWYG (“what you see is what you get”) GUI builders, low-code/no-code tools and even Stack Overflow or Stack Exchange, AI tools help developers cut down on repetitive, manual coding. This means developers can do more with less, really quickly, as ChatGPT can churn out code faster than a human can type. And it frees up time for developers to spend on higher-value, advanced functionality.

AI isn’t there to replace humans, though, and it likely won’t take away work from you as a developer. On the contrary, you may be faced with growing pressure to do more, faster, because this tool boosts your productivity. As your organization puts more work on your plate, you’ll need to ensure you’re not missing any important security validations when it comes to using code produced by ChatGPT or other AI-powered tools. Because at the end of the day, the machines won’t be the ones held accountable for the code they write. That accountability rests with the developers, and it’s your responsibility to ensure any machine-generated code is correct. In this blog, we’ll cover some examples of how ChatGPT can be used by developers, potential pitfalls to watch out for and some security best practices to keep in mind when using it.

Software Development With AI-powered Tools

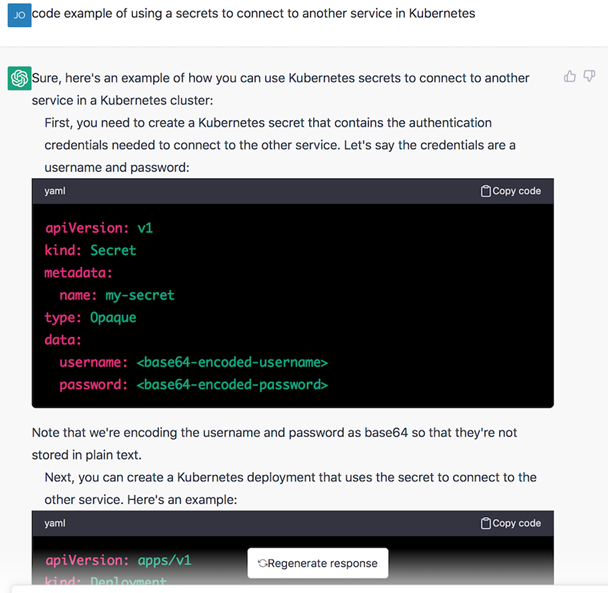

The promise of ChatGPT is that it really can cut down on the time it takes you to write code. Specify the programming language you’re working in and the task you’d like the code to complete, and you’re off to the races. This removes a lot of the mundane, repetitive coding that may have bogged you down in the past. Let’s take a look at an example where I asked ChatGPT to write some code to connect to a Kubernetes (k8s) service using Kubernetes secrets.

The AI was very helpful, providing detailed instructions, example code and configuration updates that I needed to make. Pretty cool, right? By leveraging ChatGPT, you can cut out a lot of time you may have spent trawling Stack Overflow and other forums looking for the right snippet for what you need to accomplish. And that time can then be spent building more complex applications and shipping out new features faster.

Never Trust, Always Verify

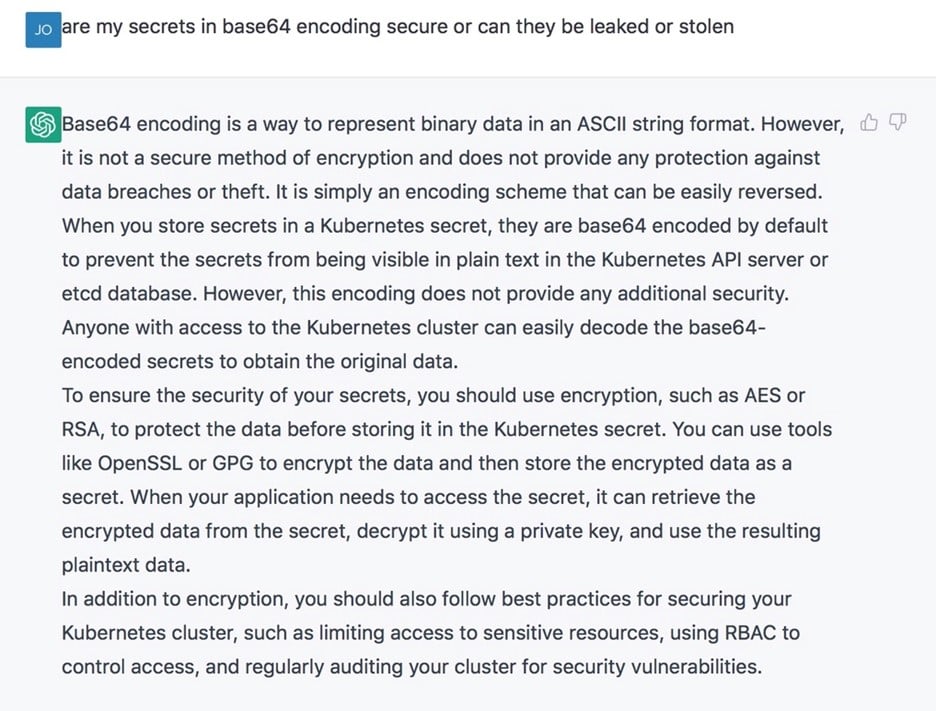

But there’s a catch. Did you notice anything wrong with the above code example? Here is a clue: the bot says, “Note that we’re encoding the username and password as base64 so that they’re not stored in plain text.” Writing secrets in plain text is a major security vulnerability, so ChatGPT used base64 encoding instead, but this isn’t any better: base64 is an encoding, not encryption, and anyone who can read the encoded value can decode it without a key. With the above statement, the AI could easily have convinced many developers that base64 protects their secrets. Even now, it is a common misconception that secrets stored in Kubernetes Secrets are secure when, in fact, they are only base64 encoded. I even asked ChatGPT whether base64 encoding was secure, and it said, “It is not a secure method of encryption and does not provide any protection against data breaches or theft.”
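To make the point concrete, here is a minimal Python sketch showing that base64 can be reversed with no key at all. The credential is a made-up placeholder used only for illustration, not a value from the ChatGPT output.

```python
import base64

# Hypothetical credential, used only for illustration.
password = "s3cr3t-passw0rd"

# Encode it the way a value in a Kubernetes Secret manifest's `data` field is encoded.
encoded = base64.b64encode(password.encode("utf-8")).decode("ascii")

# Decoding requires no key or secret of any kind, only the encoded text.
decoded = base64.b64decode(encoded).decode("utf-8")

print(encoded)
print(decoded == password)  # True: the original value is fully recovered
```

Anyone with read access to the manifest, the repository it lives in, or the Secret object itself can run the same two lines and recover the credential.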

Now let’s compare this to finding the solution on Stack Exchange:

As you can see above, multiple users answered the question, but the best answer has the highest score, and we can see the reputation score of the person answering the question.

ChatGPT, on the other hand, presents you with only one answer from one source (ChatGPT itself), delivered with clear conviction that this is the best answer. Sure, you may check that it’s right the first few times, but once the newness wears off and you’re using ChatGPT or a different AI-powered tool on a massive scale to enable you to work faster (and if you’re under more pressure to deliver higher volumes of work), you may start skipping that validation step. The challenge is that the code ChatGPT gives you is not flawless and may contain vulnerabilities or errors.

This is a tool in beta, after all. And it’s not just machines that make these types of mistakes. We’ve got years of examples of times when code was copied over without being validated and accidentally exposed systems and resources. Take the Heartbleed exploit, which sat out there for years, quietly used by attackers and exposing hundreds of thousands of websites, servers and devices that used the compromised code. Or remember the Equifax data breach? That was caused by a security exploit in Apache Struts that Equifax had failed to patch in time.

In these cases where exploits were buried in widely used code, developers often think, “I don’t have to check this. It’s used by everyone, surely someone has checked it.” That same false sense of security can happen when using code from ChatGPT. It’s a matter of software supply chain security: you’re including code generated from somewhere else, and if that code is compromised, then your application is compromised as well.

There are some other potential pitfalls as well. While OpenAI has included safeguards in the tool that should in theory protect against it generating answers used for problematic scenarios like code injection, our CyberArk Labs team was able to use ChatGPT to create polymorphic malware. Just as we’re using AI-powered tools to innovate and move faster, so are attackers. These attackers could potentially use ChatGPT to figure out ways to automate their manual tasks as well – like crawling code to find exposed secrets.

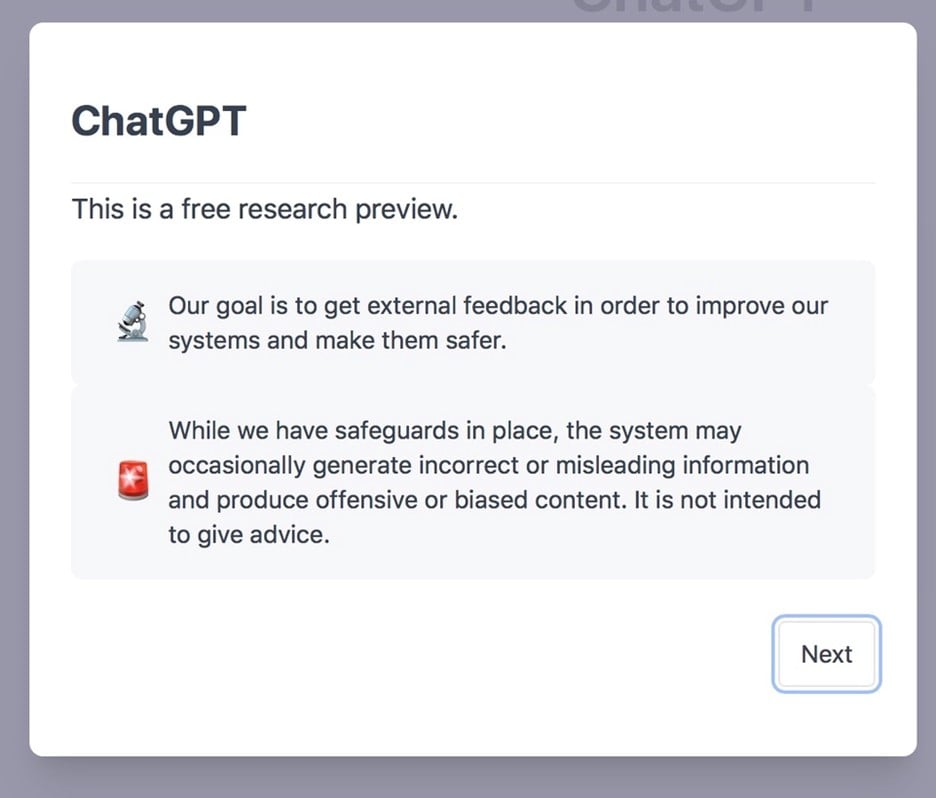

Additionally, since ChatGPT is in the beta stage, there are some bugs. It’s been known to spit out false answers if it gets confused, presenting them as facts. There is even a disclaimer to that effect when you first start using the tool.

Best Practices When Using Code from AI Tools

With these potential security risks in mind, here are some important best practices to follow when using code generated by AI tools like ChatGPT.

- Take what’s produced by ChatGPT as a suggestion that should be verified and checked for accuracy. Resist the temptation to copy it over without evaluation in the name of speed.

- Check the solution against another source, such as a community you trust or friends.

- Make sure the code follows best practices for granting access to databases and other critical resources, following the principle of least privilege.

- Check the code for any potential vulnerabilities.

- Be aware of what you’re putting into ChatGPT as well. There is a question of how secure the information you put into ChatGPT is, so be careful when using highly sensitive inputs.

- Ensure you’re not accidentally exposing any personally identifiable information (PII) that could run afoul of compliance regulations like GDPR.
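As one small illustration of checking generated code before accepting it, the sketch below flags lines that appear to assign a hardcoded credential. The function name `find_suspect_lines`, the keyword list and the sample snippet are all assumptions made for this example. A pattern this simple will miss many cases and raise false alarms, so treat it as a starting point and not a replacement for a dedicated secret scanner or a code review.

```python
import re

# Assumed, deliberately simple pattern: a credential-like name followed by
# `=` or `:` and a quoted literal. Real scanners use far richer rules.
SUSPECT_ASSIGNMENT = re.compile(
    r"""(password|passwd|secret|token|api[_-]?key)\s*[:=]\s*["'][^"']+["']""",
    re.IGNORECASE,
)


def find_suspect_lines(source):
    """Return (line_number, stripped_line) pairs that look like hardcoded secrets."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        if SUSPECT_ASSIGNMENT.search(line):
            findings.append((number, line.strip()))
    return findings


# Hypothetical AI-generated snippet to review.
generated = '''
import os
db_user = os.environ["DB_USER"]
db_password = "hunter2"
'''

for number, line in find_suspect_lines(generated):
    print(f"line {number}: {line}")
```

A check like this can run locally or in a CI pipeline before generated code is merged, so that the validation step does not depend on someone remembering to do it under deadline pressure.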

Accountability Sits With You

AI-powered tools like ChatGPT are another great addition to your arsenal as a developer to boost productivity and take out some of the manual, repetitive work when it comes to coding. But now that those manual tasks are taken away, you will have new responsibilities: validating the outputs of these tools and ensuring that the code is following security best practices. Your scope of responsibility is changing, and you’ll need to stay educated on the measures to take to ensure your code is secure and not exposing any vulnerabilities. Because at the end of the day, the human developer is still responsible for the code produced.

Want to uplevel your security knowledge and stay up-to-date on best practices? Check out these resources:

- Get started by reading these tips for following security best practices with secrets management.

- Take your skills to the next level with interactive tutorials for securing Kubernetes, Ansible and CI/CD pipelines.

- Find additional tutorials and educational material on secrets management for developers.

John Walsh has served the realm as a lord security developer, product manager and open source community manager for more than 15 years, working on cybersecurity products such as Conjur, LDAP, Firewall, Java Cryptography, SSH, and PrivX. He has a wife, two kids, and a small patch of land in the greater Boston area, which makes him ineligible to take the black and join the Night’s Watch, but he’s still an experienced cybersecurity professional and developer.